A Redefinition of the Derivative and the Integral

Why the calculus works and why it doesn't

Please note that this paper is a simplification by me of a paper or papers written and copyrighted by Miles Mathis on his site. I have replaced "I" and "my" with "MM" to show that he is talking. All links within the papers that have not yet been simplified lead directly to the Miles Mathis site and will open in another tab. (It will be clear which of these are Miles Mathis originals because they will still contain "I" and "my".) The original papers on his site are the ultimate and correct source. All contributions to his papers and orders of his books should be made on his site. (This paper incorporates the last part of Miles Mathis' calcsimp paper, are paper, power paper, calculus flaw paper and varacc paper.)

First draft begun December 2002. First finished draft May 2003 (in my files). That draft was submitted to the American Mathematical Society in August 2003. This is the extended draft of 2004, updated several times since then.

[Portraits: Newton, Leibniz, Cauchy]

Objectives

To prove:


Preface - Calculus Simplified

This is from the paper calcsimp, 2006.

The long paper that follows this preface (which originally also included a section on the point, since split out into a separate paper: A Physical Point has No Dimensions) tackles a large number of problems that have accumulated over hundreds of years, and it is therefore quite intimidating. Since calculus is already taught in school, albeit the wrong calculus, the reader may wonder why it is necessary to have the subject redefined, especially since it is already scary.

For this reason this shorter and simplified preface is necessary. What Miles Mathis plans to do here is try to sell his idea to a hypothetical reader, a reader who is just entering first-semester calculus. MM will explain to him or her why his explanation is necessary, why it is better, and why he or she should prefer to take a course based on his explanation rather than a course based on current theory. In doing this, it will be shown that the current notation and the current method of teaching calculus are a gigantic mess. In a hundred years, all educated people will look back and wonder how calculus could exist, and be taught, in such a confusing manner. They will wonder how such basic math, so easily understood, could have remained in a halfway state for so many centuries. The current notation and derivation for the equations of calculus will look to them like the leeches that doctors used to put on patients as an all-round cure, or like the holes they drilled in the head to cure headaches. Many students have felt that learning calculus is like having holes drilled in their heads, and they were right to feel that way.

What some of you students have no doubt already felt is that the further along in math you get, the more math starts to seem like a trick. When you first start out, math is pretty easy, since it makes sense. You do not just learn an equation. No, you learn an equation and you learn why the equation makes sense. You do not just acquire a fact, you acquire understanding. For example, when you learn addition, you do not just learn how to use a plus sign. You also learn why the sign works. You are shown the two apples and the one apple, and then you put them together to get three apples. You see the three apples and you go, “Aha, now I see!” Addition makes sense to you. It does not just work. You fully understand why it works.

Geometry is also understood by most students, since geometry is a physical math. You have pictures you can look at and line segments you can measure and so on, so it never feels like some kind of magic. If your trig teacher was a good teacher, you may have felt this way about trig as well. The sine and cosine stuff seems a bit abstract at first, but sooner or later, by looking at triangles and circles, it may dawn on you that everything makes absolute sense.

Algebra is the next step, and many people get lost there. But if you can get your head around the idea of a variable, you are halfway home.

But when we get to calculus, everyone gets swamped. Notice that MM did not say, “almost everyone.” No, he said everyone. Even the biggest nerd with the thickest glasses who gets A’s on every paper is completely confused. Those who do well in their first calculus courses are the ones that just memorize the equations and do not ask any questions.

One reason for this is that with calculus you will be given some new signs, and these signs will not really make sense in the old ways. You will be given an arrow pointing at zero, and this little arrow and zero will be underneath variables or next to big squiggly lines. This arrow and zero are supposed to mean, “let the variable or function approach zero,” but your teacher probably will not have time to really make you understand what a function is or why anyone wanted it to approach zero in the first place. Your teacher would answer such a question by saying, “Well, we just let it go toward zero and then see what happens. What happens is that we get a solution. We want a solution, don’t we? If going to zero gives us a solution, then we are done. You cannot ask questions in math beyond that.”

Well, if your teacher says that to you, you can tell your teacher does not have a clue either. Math is not just memorizing equations, it is understanding equations. All math, no matter how difficult, is capable of being understood in the same way that 2+2=4 can be understood; and if your teacher cannot explain it to you, then he or she does not understand it.

What is happening with calculus is that you are taking your first step into a new kind of math and science. It is a kind of faith-based math. Almost everything you will learn from now on is math of this sort. You will not have time to understand it, therefore you must accept it and move on. Unless you plan to become a professor of the history of math, you will not have time to get to the roots of the thing and really make sense of it in your head.

No one understands or ever understood calculus, not Einstein, not Cauchy, not Cantor, not Russell, not Bohr, not Feynman, no one. Not even Leibniz or Newton understood it. That is a big statement, but MM will prove it here, not with philosophy, but with a simple 'magic' table. Once you see the proof you will know that no one understood it, because if anyone had, they would have corrected it as MM is about to.

It is clear that math after calculus is faith-based. Just listen to a quote from Richard Feynman, who is probably the most famous physicist after Einstein, having received a lot of attention in the second half of the 20th century as one of the fathers of Quantum Electrodynamics. One of the most quoted lines attributed to him is, “Shut up and calculate!” Meaning, “Don’t ask questions. Don’t try to understand it. Accept that the equation works and memorize it. The equation works because it matches experiment. There is no understanding beyond that.”

All of QED is based on this same idea, which started with Heisenberg and Bohr back in the early 1900s. “The physics and math are not understandable in the normal way, so do not ask stupid questions like that any more.” This last sentence is basically the short form of what is called the Copenhagen Interpretation of quantum mechanics. The Copenhagen Interpretation applies to just about everything now, not just QED. It also applies to Relativity, in which the paradoxes must simply be accepted, whether they make sense or not. And you might say that it also applies to calculus. Historically, your professors have accepted the Copenhagen Interpretation of calculus, and this interpretation states that students’ questions cannot be answered.

You will be taught to understand calculus like your teacher understands it, and if your teacher is very smart he understands it like Newton understood it. He will have memorized Newton’s or Cauchy’s derivation and will be able to put it on the blackboard for you. But this derivation will not make sense like 2+2=4 makes sense, and so you will still be confused. If you continue to ask questions, you will be read the Copenhagen Interpretation, or some variation of it. You will be told to "shut up and calculate."

In the first semester of calculus you will learn differential calculus. The amazing thing is that you will probably make it to the end of the semester without ever being told what a differential is. Most mathematicians learn that differential calculus is about solving certain sorts of problems using a derivative, and later courses called “differential equations” are about solving more difficult problems in the same basic way. However, most never think about what a differential is, outside of calculus.

MM did not ever think about what a differential was until later, and he is not alone. This is clear because when MM tells people that his new calculus is based on a constant differential instead of a diminishing differential, they look at him like he just started speaking Japanese with a Dutch accent. For them, a differential is a calculus term, and in calculus the differentials are always getting smaller. They cannot imagine what a constant differential is.

A differential is one number subtracted from another number: (2-1) is a differential. So is (x-y). A “differential” is just a fancier term for a “difference”. A differential is written as two terms and a minus sign, but as a whole, a differential stands for one number. The differential (2-1) is obviously just 1, for example. So you can see that a differential is a useful expansion. It is one number written in a longer form. You can write any number as a differential. The number five can be written as (8-3), or in a multitude of other ways.

We may want to write a single number as a differential because it allows us to define that differential as some useful physical parameter. For instance, a differential is most often a length. Say you have a ruler. Go to the 2-inch mark. Now go to the 1-inch mark. What is the difference between the two marks? It is one inch, which is a length. (2-1) may be a length. (x-y) may also be a length. In pure math, we have no lengths, of course, but in math applied to physics, a differential is very often a length.

The problem is that modern mathematicians do not like to teach you math by drawing you pictures. They do not like to help you understand concepts by having you imagine rulers or lengths or other physical things. They want you to get used to the idea of math as completely pure. They tell you that it is for your own good. They make you feel like physical ideas are equivalent to pacifiers: you must grow up and get rid of them. But the real reason is that, starting with calculus, they can no longer draw you meaningful pictures. They are not able to make you understand, so they tell you to shut up and calculate.

It is kind of like the wave/particle duality, another famous concept you have probably already heard of. Light is supposed to act like a particle sometimes and like a wave at other times. No one has been able to draw a picture of light that makes sense of this, so we are told that it cannot be done. But in another one of his papers MM has drawn a picture of light that makes sense of this, and in this paper MM will show you a pretty little graph that makes perfect sense of the calculus. You will be able to look at the graph with your own eyes and you will see where the numbers are coming from, and you will say, “Aha, I understand. That was easy!” (See The Probability Wave of QM is not reality.)

There is basically only one equation that you learn in your first semester of calculus. All the other equations are just variations and expansions of the one equation. This one equation is also the basic equation of what you will learn next semester in integral calculus. All you have to do is turn it upside down, in a way. This equation is:

$$ y' = nx^{n-1} $$

This is the magic equation. What you will not be told is that this magic equation was not invented by either Newton or Leibniz. All they did is invent two similar derivations of it. Both of them knew the equation worked, and they wanted to put a foundation under it. They wanted to understand where it came from and why it worked, but they failed, and everyone else since has failed. The reason they failed is that the equation was used historically to find tangents to curves, and everyone all the way back to the ancient Greeks had tried to solve this problem by using a magnifying glass.

What is meant by this is that for millennia, the accepted way to approach the problem and the math was to try to straighten out the curve at a point. If you could straighten out the curve at that point you would have the tangent at that point. The ancient Greeks had the novel idea of looking at smaller and smaller segments of the curve, closer and closer to the point in question. The smaller the segment, the less it curved. Rather than use a real curve and a real magnifying glass, the Greeks just imagined the segment shrinking down. This is where we come to the diminishing differential. Remember that MM said the differential was a length. Well, the Greeks assigned that differential to the length of the segment, and then imagined it getting smaller and smaller.

Two thousand years later, nothing had changed. Newton and Leibniz were still thinking the same way. Instead of saying the segment was “getting smaller” they said it was “approaching zero”. That is why we now use the little arrow and the zero. Newton even made tables, kind of like MM will make below. He made tables of diminishing differentials and was able to pull the magic equation from these tables.

The problem is that he and everyone else has used the wrong tables. You can pull the magic equation from a huge number of possible tables, and in each case the equation will be true and in each case the table will “prove” or support the equation. But in only one table will it be clear why the equation is true. Only one table will be simple enough and direct enough to show a 16-year-old where the magic equation comes from. Only one table will cause everyone to gasp and say, “Aha, now I understand.”

Newton and Leibniz never discovered that table, and no one since has discovered it. All their tables were too complex by far. Their tables required you to make very complex operations on the numbers or variables or functions. In fact, these operations were so complex that even Newton and Leibniz got lost in them. As will be shown after unveiling the table, Newton and Leibniz were forced to perform operations on their variables that were actually false.

Getting the magic equation from a table of diminishing differentials is so complex and difficult that no one has ever been able to do it without making a hash of it. It can be done, but it is not worth doing. If you can pull the magic equation from a simple table of integers, why try to pull it from a complex table of functions with strange and confusing scripts? Why teach calculus as a big hazy mystery, invoking infinite series or approaches to 0’s or infinitesimals, when you can teach it at a level that is no more complex than 1+1=2?

So here is the lesson that will teach you differential calculus in one day, in one paper. If you have reached this level of math, the only thing that should look strange to you in the magic equation is the y’. You know what an exponent is, and you should know that you can write an exponent as (n-1) if you want to. That is just an expansion of a single number into a differential, as was taught above. If n = 2, for instance, the exponent is just 1. Beyond that, “n” is just another variable. It could be “z” or “a” or anything else. That variable just generalizes the equation for us, so that it applies to all possible exponents.
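As an illustration only, here is a minimal Python sketch that evaluates the magic equation for a given exponent n and value x. Python is an editorial choice (the original uses no code), and the function name `magic_rate` is hypothetical.

```python
def magic_rate(n: int, x: float) -> float:
    """Evaluate the magic equation y' = n * x**(n - 1).

    Hypothetical helper for illustration; n is the exponent, x the value.
    """
    # The exponent (n - 1) is just the single number n - 1 written as a differential.
    return n * x ** (n - 1)


if __name__ == "__main__":
    # With n = 2 the exponent n - 1 is just 1, so y' = 2x.
    print(magic_rate(2, 5))  # 10
    print(magic_rate(3, 2))  # 12
```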

All that is just simple algebra. But you do not normally have primed variables in high school algebra. What does the prime signify? That prime is telling you that y is a different sort of variable than x. When you apply this magic equation to physics, x is usually a distance and y is a velocity. A variable could also be an acceleration, or it could be a point, or it could be just about anything. But we need a way to remind ourselves that some variables are one kind of parameter and some variables are another. So we use primes or double primes and so on.

This is important, because it means that mathematically, a velocity is not a distance, and an acceleration is not a velocity. They have to be kept separate. A calculus equation takes you from one sort of variable to another sort. You cannot have a distance on both sides of the magic equation, or a velocity on both sides. If x is a distance, y’ cannot be a distance, too.

Some people will try to convince you later that calculus can be completely divorced from physics, or from the real world. They will stress that calculus is pure math, and that you do not need to think of distances or velocities or physical parameters. But if this were true, we would not need to keep our variables separate. We would not need to keep track of primed variables, or later double-primed variables and so on.

Variables in calculus do not just stand for numbers, they stand for different sorts of numbers, as you see. In pure math, there are not different sorts of numbers, beyond ordinal and cardinal, or rational and irrational, or things like that. In pure math, a counting integer is a counting integer and that is all there is to it. But in calculus, our variables are counting different things and we have to keep track of this. That is what the primes are for.

What, you may ask, is the difference between a length and a velocity? You can probably answer that without the calculus, and probably without outside help. To measure a length you do not need a watch. To measure velocity, you do. Velocity has a “t” in the denominator, which makes it a rate of change. A rate is just a ratio, and a ratio is just one number over another number, with a slash in between. Basically, you hold one variable steady and see how the other variable changes relative to it. With velocity, you hold time steady (all the ticks are the same length) and see how distance changes during that time. You put the variable you know more about (it is steady) in the denominator and the variable you are seeking information about (you are measuring it) in the numerator. Or, you put the defined variable in the denominator (time is defined as steady) and the undefined variable in the numerator (distance is not known until it is measured).
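To make the ratio concrete, here is a minimal Python sketch, assuming position readings taken on a ruler at equal time ticks. The function `velocities` and the sample readings are hypothetical illustrations, not part of the original paper: the tick length is the steady (defined) denominator, and each measured change in distance is a numerator.

```python
def velocities(positions: list[float], tick: float) -> list[float]:
    """Rate of change of distance over equal time ticks (hypothetical helper).

    positions[i] is the ruler reading at the i-th tick. Every tick has the
    same length, so time is held steady in the denominator while the measured
    change in distance goes in the numerator.
    """
    if tick <= 0:
        raise ValueError("tick length must be positive")
    return [(b - a) / tick for a, b in zip(positions, positions[1:])]


if __name__ == "__main__":
    # Hypothetical readings in inches, taken once per second.
    print(velocities([0.0, 2.0, 4.0, 6.0], tick=1.0))  # [2.0, 2.0, 2.0]
```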

All this can also be applied to velocity and acceleration. The magic equation can be applied to velocity and acceleration, too. If x is a velocity, then y’ is an acceleration. This is because acceleration is the rate of change of the velocity. Acceleration is v/t. So you can see that y’ is always the rate of change of x. Or, y’ is always x/t. This is another reason that calculus cannot really be divorced completely from physics. Time is a physical thing. A pure mathematician can say, “Well, we can say that y’ is always x/z, where z is not time but just a pure variable.” But in that case, x/z is still a rate of change. You can refuse to call “z” a time variable, but you still have the concept of change. A pure number changing still implies time passing, since nothing can change without time passing. Mathematicians want “change” without “time”, but change is time. If a mathematician can imagine or propose change without time, then he is cleverer than the gods by half, since he has just separated a word from its definition. (See A Revaluation of Time and Velocity and the Concept of Relativity.)

At any rate, you are already in a better position to understand the calculus than any math student in history. Whether you like that little diversion into time and change is really beside the point, since even if you believe in pure math it does not affect MM's argument.

All the famous mathematicians in history have studied the curve in order to study rate of change. To develop the calculus, they have taken some length of some curve and then let that length diminish. They have studied the diminishing differential, the differential approaching zero. This approach to zero gives them an infinite series of differentials, and they apply a method to the series in order to understand its regression.

But it is much more useful to notice that curves always concern exponents. Curves are all about exponents, and so is the calculus. So what MM did is study integers and exponents, in the simplest situations. He started by letting z equal some point. If he let a variable stand for a point, then MM had to have a different sort of variable stand for a length, so that MM did not confuse a point and a length. The normal way to do this is to let a length be Δz (read “change in z”). MM wanted lengths instead of points, since points cannot be differentials. Lengths can. You cannot think of a point as (x-y). But if x and y are both points, then (x-y) will be a length, you see.

The Algorithm

In the first line of his table, MM lists the possible integer values of Δz. You can see that this is just a list of the integers, of course. Next MM lists some integer values for other exponents of Δz. This is also straightforward. At line 7, MM begins to look at the differentials of the previous six lines. In line 7, MM is studying line 1, and he is just subtracting each number from the next. Another way of saying it is that MM is looking at the rate of change along line 1. Line 9 lists the differentials of line 3. Line 14 lists the differentials of line 9. You should be able to follow the logic on this, so go down to the table below.
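The subtraction step described above can be sketched in a few lines of Python. This is an illustrative assumption, not MM's own procedure: the helper names `differences` and `power_row` are hypothetical, and the sketch starts the power sequence at z = 0 so that the first differences begin with 1 - 0 = 1, as lines 7 to 12 of the table do.

```python
def differences(values: list[int]) -> list[int]:
    """Subtract each number from the next: one more Δ in the table's notation."""
    return [b - a for a, b in zip(values, values[1:])]


def power_row(exponent: int, count: int) -> list[int]:
    """z**exponent for z = 0, 1, ..., count (hypothetical helper).

    Starting at z = 0 is an assumption of this sketch; it makes the first
    differences start with 1 - 0 = 1, matching lines 7-12 of the table.
    """
    return [z ** exponent for z in range(count + 1)]


if __name__ == "__main__":
    line3 = power_row(2, 10)     # 0, 1, 4, 9, ... (line 3 lists these from 1 on)
    line9 = differences(line3)   # ΔΔz²  = 1, 3, 5, 7, ...
    line14 = differences(line9)  # ΔΔΔz² = 2, 2, 2, ...
    print(line3[1:])
    print(line9)
    print(line14)
```

The same two helpers reproduce the other rows, for example `differences(power_row(3, 7))` gives line 10.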

With a tight definition of a rate of change, our variable assignments clearly and unambiguously set, and the necessary understanding of the number line and the graph, it is possible to solve any calculus problem without infinite series or limits. All that is necessary is this beautiful table made up by the author, possibly for the first time. (None of the math books in history contain this table, although it may be buried out there in some library. It will save every student of high school calculus from the unsolvable mysteries of calculus.)

1) Δz = 1, 2, 3, 4, 5, 6, 7, 8, 9...
2) Δ2z = 2, 4, 6, 8, 10, 12, 14, 16, 18...
3) Δz² = 1, 4, 9, 16, 25, 36, 49, 64, 81...
4) Δz³ = 1, 8, 27, 64, 125, 216, 343...
5) Δz⁴ = 1, 16, 81, 256, 625, 1296...
6) Δz⁵ = 1, 32, 243, 1024, 3125, 7776, 16807
7) ΔΔz = 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1
8) ΔΔ2z = 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2
9) ΔΔz² = 1, 3, 5, 7, 9, 11, 13, 15, 17, 19
10) ΔΔz³ = 1, 7, 19, 37, 61, 91, 127
11) ΔΔz⁴ = 1, 15, 65, 175, 369, 671
12) ΔΔz⁵ = 1, 31, 211, 781, 2101, 4651, 9031
13) ΔΔΔz = 0, 0, 0, 0, 0, 0, 0
14) ΔΔΔz² = 2, 2, 2, 2, 2, 2, 2, 2, 2, 2
15) ΔΔΔz³ = 6, 12, 18, 24, 30, 36, 42
16) ΔΔΔz⁴ = 14, 50, 110, 194, 302
17) ΔΔΔz⁵ = 30, 180, 570, 1320, 2550, 4380
18) ΔΔΔΔz³ = 6, 6, 6, 6, 6, 6, 6, 6
19) ΔΔΔΔz⁴ = 36, 60, 84, 108
20) ΔΔΔΔz⁵ = 150, 390, 750, 1230, 1830
21) ΔΔΔΔΔz⁴ = 24, 24, 24, 24
22) ΔΔΔΔΔz⁵ = 240, 360, 480, 600
23) ΔΔΔΔΔΔz⁵ = 120, 120, 120
24) ΔΔΔΔΔΔΔz⁶ = 720, 720, 720
And so on.

Again, this is what you call simple number analysis. It is a table of differentials. The first line is a list of the potential integer lengths of an object, and a length is a differential. It is also a list of the cardinal integers. It is also a list of the possible values for the number of boxes we could count in our graph. It is therefore both physical and abstract, so that it may be applied in any sense one wants. Line 2 lists the potential lengths or box values of the variable Δ2z. Line 3 lists the possible box values for Δz². Line 7 begins the second-degree differentials. It lists the differentials of line 1, as you see. To find differentials, one simply subtracts each number from the next. Line 8 lists the differentials of line 2, and so on. Line 14 lists the differentials of line 9. The logic of the rest should be clear.

Now let us pull out the important lines and relist them in order:

7) ΔΔz = 1, 1, 1, 1, 1, 1, 1
14) ΔΔΔz² = 2, 2, 2, 2, 2, 2, 2
18) ΔΔΔΔz³ = 6, 6, 6, 6, 6, 6, 6
21) ΔΔΔΔΔz⁴ = 24, 24, 24, 24
23) ΔΔΔΔΔΔz⁵ = 120, 120, 120
24) ΔΔΔΔΔΔΔz⁶ = 720, 720, 720

Looking carefully at these we see that

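$$ 2\,\Delta\Delta z = \Delta\Delta\Delta z^2 $$

{2 times (ΔΔz = 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1) = ΔΔΔz² = 2, 2, 2, 2, 2, 2, 2, 2, 2, 2}

$$ 3\,\Delta\Delta\Delta z^2 = \Delta\Delta\Delta\Delta z^3 $$

{3 times (ΔΔΔz² = 2, 2, 2, 2, 2, 2, 2, 2, 2, 2) = ΔΔΔΔz³ = 6, 6, 6, 6, 6, 6, 6, 6}

$$ 4\,\Delta\Delta\Delta\Delta z^3 = \Delta\Delta\Delta\Delta\Delta z^4 $$

$$ 5\,\Delta\Delta\Delta\Delta\Delta z^4 = \Delta\Delta\Delta\Delta\Delta\Delta z^5 $$

$$ 6\,\Delta\Delta\Delta\Delta\Delta\Delta z^5 = \Delta\Delta\Delta\Delta\Delta\Delta\Delta z^6 $$

and so on.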
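These equalities between the constant lines can be checked numerically. The following is a minimal Python sketch under stated assumptions: `constant_value` is a hypothetical helper that repeatedly subtracts each number from the next, starting the sequence at z = 0, and reads off the constant row; the script then compares n times the constant for exponent n - 1 with the constant for exponent n.

```python
def differences(values: list[int]) -> list[int]:
    """Subtract each number from the next (one more Δ)."""
    return [b - a for a, b in zip(values, values[1:])]


def constant_value(exponent: int, count: int = 12) -> int:
    """Constant row reached from z**exponent, z = 0..count (hypothetical helper).

    Taking `exponent` successive differences corresponds to the table row
    with exponent + 1 deltas, e.g. ΔΔΔz² for exponent 2.
    """
    if count <= exponent:
        raise ValueError("count must exceed the exponent")
    row = [z ** exponent for z in range(count + 1)]
    for _ in range(exponent):
        row = differences(row)
    if len(set(row)) != 1:
        raise ValueError("row did not become constant")
    return row[0]


if __name__ == "__main__":
    # Check n * (constant line for n - 1) == (constant line for n), n = 2..6.
    for n in range(2, 7):
        lower, upper = constant_value(n - 1), constant_value(n)
        print(f"{n} * {lower} = {upper}: {n * lower == upper}")
```

Each printed comparison should read True, mirroring 2ΔΔz = ΔΔΔz² through 6ΔΔΔΔΔΔz⁵ = ΔΔΔΔΔΔΔz⁶.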

All these equations are equivalent to the magic equation:

$$ y' = nx^{n-1} $$

In any of those equations, all we have to do is let y’ stand for the right side (the side with the extra Δ) and nxⁿ⁻¹ stand for the left side. No matter what exponents we use, the equation will always resolve into our magic equation.

Since in those last equations we have z on both sides, we can cancel a lot of those deltas and get down to this:

$$
\begin{aligned}
2z &= \Delta z^2 \\
3z^2 &= \Delta z^3 \\
4z^3 &= \Delta z^4 \\
5z^4 &= \Delta z^5 \\
6z^5 &= \Delta z^6
\end{aligned}
$$

Now, if we reverse it:

$\Delta z^2 = 2z$: “the rate of change of z squared is two times z.”

$\Delta z^3 = 3z^2$: “the rate of change of z cubed is three times z squared.”

$\Delta z^4 = 4z^3$: “the rate of change of z to the 4th power is four times z cubed.”

$\Delta z^5 = 5z^4$: “the rate of change of z to the 5th power is five times z to the 4th power.”

$\Delta z^6 = 6z^5$: “the rate of change of z to the 6th power is six times z to the 5th power.”

That is information that we just got from a table, and that table just listed numbers. Simple differentials. One number subtracted from the next.

This is useful to us because it is precisely what we were looking for when we wanted to learn calculus. We use the calculus to tell us what the rate of change is for any given variable and exponent. Given an x, we seek a y’, where y’ is the rate of change of x. And that is what we just found. Currently, calculus calls y’ the derivative, but that is just fancy terminology that does not really mean anything. It just confuses people for no reason. The fact is, y’ is a rate of change, and it is better to remember that at all times.

You may still have one very important question. You will say, “I see where the numbers are coming from, but what does it mean? Why are we selecting the lines in the table where the numbers are constant?” We are going to those lines, because in those lines we have flattened out the curve. If the numbers are all the same, then we are dealing with a straight line. A constant differential describes a straight line instead of a curve. We have dug down to that level of change that is constant, beneath all our other changes. As you can see, in the equations with a lot of deltas, we have a change of a change of a change. . . . We just keep going down to sub-changes until we find one that is constant. That one will be the tangent to the curve.
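The "keep going down to sub-changes until one is constant" procedure can be sketched as a loop. In this hypothetical Python helper, `dig_to_constant`, the starting row zⁿ is counted as already carrying one Δ (as in Δzⁿ), each subtraction adds another Δ, and `count` is an assumed sample size that only needs to exceed the exponent.

```python
def differences(values: list[int]) -> list[int]:
    """Subtract each number from the next (one more Δ)."""
    return [b - a for a, b in zip(values, values[1:])]


def dig_to_constant(exponent: int, count: int = 12) -> tuple[int, int]:
    """Take sub-changes of z**exponent until the row is constant.

    Hypothetical helper. Returns (number of deltas in the table's notation,
    constant value). The sequence starts at z = 0, an assumption of this sketch.
    """
    if count <= exponent:
        raise ValueError("count must exceed the exponent")
    row = [z ** exponent for z in range(count + 1)]
    deltas = 1  # the starting row Δz**n already carries one Δ
    while len(set(row)) > 1:
        row = differences(row)
        deltas += 1
    return deltas, row[0]


if __name__ == "__main__":
    for n in range(1, 7):
        deltas, value = dig_to_constant(n)
        print(f"{'Δ' * deltas}z^{n} = {value}")
```

Run as a script, this should list one constant line per exponent, with the same delta counts and values as lines 7, 14, 18, 21, 23 and 24 of the table (1, 2, 6, 24, 120, 720). The exponent 6 needs 7 deltas and the exponent 2 only 3, with no approach to zero anywhere.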

If we want to find the rate of change for the exponent 6, for instance, we only have to dig down 7 sub-changes, to line 24) ΔΔΔΔΔΔΔz⁶ = 720, 720, 720.

We do not have to approach zero at all.

In a way we have done the same thing that the Greeks were doing and that Newton was doing. We have flattened out the curve. But we did not use a magnifying glass to do it. We did not go to a point, or get smaller and smaller. We went to sub-changes, which are a bit smaller, but they are not anywhere near zero. In fact, to get to zero, you would have to have an infinite number of deltas, or sub-changes. And this means that your exponent would have to be infinity itself. Calculus never deals with infinite exponents, so there is never any conceivable reason to go to zero. We do not need to concern ourselves with points at all. Nor do we need to talk of infinitesimals or limits. We don't have an infinite series, and we don't have any vanishing terms. We have a definite and limited series, one that is 7 terms long with the exponent 6 and only 3 terms long with the exponent 2.

Hopefully, you can see that the magic equation is just a generalization of all the constant differential equations we pulled from the table. To “invent” the calculus, we do not have to derive the magic equation at all. All we have to do is generalize a bunch of specific equations that are given us by the table. By that it is meant that the magic equation is just an equation that applies to all similar situations, whereas the specific equations only apply to specific situations (as when the exponent is 2 or 3, for example). By using the further variable “n”, we are able to apply the equation to all exponents. Like this:

$$ nz^{n-1} = \Delta z^n $$

And we do not have to prove or derive the table either. The table is true by definition. Given the definition of integer and exponent, the table follows. The table is axiomatic number analysis of the simplest kind. In this way it has been shown that the basic equation of differential calculus falls out of simple number relationships like an apple falls from a tree.

Lagrange claimed that the Taylor series was the secret engine behind the calculus, but this chart is the secret engine behind both the Taylor series and the calculus. It is unlikely that the Greeks were concealing any algorithms or other devices, but if they were, this is the algorithm they were likely concealing. If Archimedes had been aware of this chart, he would not have continued to pursue his solutions with infinite series.

The calculus works only because the equations of the calculus work. The equation $y' = nx^{n-1}$ and the other equations of the calculus are the primary operational facts of the mathematics, not the proofs of Newton or Leibniz or Cauchy. Newton’s and Leibniz’s most important recognition was that these generalized equations were the most needful things, and that they must be achieved by whatever means necessary. The means available to them in the late 17th century was a proof using infinitesimals. A slightly finessed proof yielded results that far outweighed any philosophical cavils, and this proof has stood ever since. But what the calculus is really doing when it claims to look at diminishing differentials and limits is take information from this chart. This chart and the number relations it clearly reveals are the foundations of the equations of the calculus, not infinite series or limits.

To put it in even balder terms, the equalities listed above may be used to solve curve equations. By “solve” it is meant that the equalities listed in this chart are substituted into curve equations in order to give us information we could not otherwise get. Rate of change problems are thereby solved by a simple substitution, rather than by a complex proof involving infinities and limits. A curve equation tells us that one variable is changing at a rate equal to the rate that another variable (to some exponent) is changing. The chart above tells us the same thing, but in it the same variable is on both sides of the equation. So obviously all we have to do is substitute in the correct way and we have solved our equation. We have taken information from the chart and put it into the curve equation, yielding new information. It is really that simple. The only questions to ask are, "What information does the chart really contain?" And, "What information does it yield after substitution into a curve equation?"

Δz is defined as a linear distance from zero on the graph, in the x-direction (if the word "distance" has too much physical baggage for you, you may substitute "change from zero"). ΔΔz is then the change of Δz, and so on. Since ΔΔx/ΔΔt is a velocity, ΔΔΔz is a sort of constant acceleration, waiting to be calculated (given a ΔΔt). In that sense, ΔΔΔΔz is a variable acceleration waiting to be calculated. ΔΔΔΔΔz is a change of a variable acceleration, and ΔΔΔΔΔΔz is a change of a change of a variable acceleration. Some may ask, "Do these kinds of accelerations really exist? They boggle the mind. How can things be changing so fast?" High exponent variables tell us that we are dealing with these kinds of accelerations, whether they exist in physical situations or not. The fact is that complex accelerations do exist in real life, but this is not the place to discuss it. Most people can imagine a variable acceleration, but get lost beyond that. Obviously, in strictly mathematical situations, changes can go on changing to infinity.

The previous paragraph takes velocity to be ΔΔx/ΔΔt, which it must be, rather than Δx/Δt as in current notation, which has one delta fewer at each step. Current notation assumes that curve-equation variables are naked variables, x and t, but really they are delta variables, Δx and Δt. Current theory says that velocity is a change of these variables; therefore velocity must be ΔΔx/ΔΔt.

One might object that this makes velocity not distance over time, but change in distance over change in time. Precisely: that is the definition of velocity.

For example, say that a person is sitting at the number 3 on a big ruler. The number 3 tells the world that the person is three inches from the end. It is giving a distance. Now, can that distance be used to calculate a velocity? How? The person is sitting there, not moving. There is no velocity involved, so it would be ridiculous to calculate one. To calculate a velocity, one needs to have a velocity, in which case the person must move from one number mark on the ruler to another. In which case there is a change in distance!

Another objection: what if the person was at the origin to begin with? Then the distance and the change in distance are the same thing. They would be the same number, but mathematically the calculation would still involve a subtraction. If one were writing out the whole thing, it would always be implied that ΔΔz = Δz(final) - Δz(initial) = Δz(final) - 0. The result would be the same number, with the same magnitude, but conceptually it is not the same. Δz and ΔΔz are both measured in meters, say, but they are not the same conceptually.
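The ruler example comes down to two subtractions and a division. This is a minimal sketch, assuming ruler marks in inches and clock readings in seconds; `change` and `velocity` are hypothetical helper names, not notation from the text.

```python
def change(initial, final):
    """A change is always a subtraction: final minus initial."""
    return final - initial


def velocity(z_initial, z_final, t_initial, t_final):
    """Change in distance over change in time (ΔΔz / ΔΔt in the text's notation)."""
    return change(z_initial, z_final) / change(t_initial, t_final)


# Sitting still at the 3-inch mark: no change in distance, so no velocity.
print(velocity(3, 3, 0, 2))   # 0.0
# Moving from the 3-inch mark to the 7-inch mark in 2 seconds.
print(velocity(3, 7, 0, 2))   # 2.0 (inches per second)
# Starting at the origin: same number as the final mark, still a subtraction.
print(change(0, 5))           # 5
```

The last call shows the origin case: the result, 5, is the same number as the final mark, but it is still computed as a subtraction.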

One way to clear up part of this confusion is to distinguish between length and distance. In physics, the two are often used interchangeably. In rate-of-change problems, more clarity is needed, so one word is assigned exclusively to each situation: length to Δz and distance to ΔΔz. A cardinal number represents a length from zero. It is the extension between two static points, with no movement implied. One would certainly have to move to go from one point to the other, but a length implies no time variable, no change in time. A length can exist in the absence of time. A distance, however, cannot. A distance implies the presence of another variable, even if that variable is not a physical variable like time. For instance, to actually travel from one point to another requires time. Distance implies movement, or a second-degree change. A length is a static change in x. A distance is a movement from one x to another.

Even pure mathematicians can have nothing to say against MM's table, since it has no necessary physical content. MM calls his initial differentials lengths, but that is to suit himself. You can subtract all the physical content out of his table and it is still the same table and still completely valid.

We do not need to consider any infinite series, we do not need to analyze differentials approaching zero in any strange way, we do not need to think about infinitesimals, we do not need to concern ourselves with functions, we do not need to learn weird notations with arrows pointing to zeros underneath functions, and we do not need to notate functions with parentheses and little “f’s”, as in f(x). But the most important thing we can ditch is the current flawed derivation of the magic equation.

Newton's flawed derivation

The current derivation of the magic equation is a simplified form of Newton’s derivation, but conceptually it is exactly the same. Nothing important has changed in 350 years. This is the derivation you will be taught this semester. The symbol δ stands for “a very small change”. It is the lower-case Greek “d”, called delta; the upper-case form is Δ, the capital delta. Sometimes the two are used interchangeably, and you may see the derivation below with Δ instead of δ. You may even see it with the letter “d”. There is no need to discuss which symbol is better and why, since the question is now moot. After today we can ditch all three.

Anyway, we start by taking any functional equation. “Functional” just means that y depends upon x in some way. Think of how a velocity depends on a distance. To measure a velocity you need to know a distance, so that velocity is a function of distance. But distance is not a function of velocity, since you can measure a distance without being concerned at all about velocity. So, we take any functional equation, say

$$y = x^2$$

Increase it by δy and δx to obtain

$$y + \delta y = (x + \delta x)^2$$

Subtract the first equation from the second:

$$\delta y = (x + \delta x)^2 - x^2 = 2x\,\delta x + (\delta x)^2$$

Divide by δx:

$$\frac{\delta y}{\delta x} = 2x + \delta x$$

Let δx go to zero (only on the right side, of course):

$$\frac{\delta y}{\delta x} = 2x$$

$$y' = 2x$$
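The subtraction and division steps can be checked with exact rational arithmetic. This is a minimal sketch, assuming y = x² and using Python's `fractions.Fraction` to avoid rounding; `difference_quotient` is a hypothetical helper name. It confirms only that δy/δx = 2x + δx for any nonzero δx. It does not perform the final step of letting δx go to zero, and it takes no position on the objections raised against these steps.

```python
from fractions import Fraction


def difference_quotient(x, dx):
    """Return ((x + dx)**2 - x**2) / dx exactly, for nonzero dx."""
    x, dx = Fraction(x), Fraction(dx)
    dy = (x + dx) ** 2 - x ** 2   # subtraction step: 2*x*dx + dx**2
    return dy / dx                # division step: 2*x + dx


for dx in (Fraction(1), Fraction(1, 10), Fraction(1, 1000)):
    print(dx, difference_quotient(3, dx))
```

At x = 3 this prints 7, 61/10 and 6001/1000: each result is exactly 6 + δx, and the leftover δx is the term that the final step of the derivation discards.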

That is how they currently derive the magic equation. Any teenager, or any honest person, will look at that series of operations and go, “What the. . . ?” How can we justify all those seemingly arbitrary operations? The answer is, we cannot. As it turns out, precisely none of them are legal. But Newton used them, he was a very smart guy, and we get the equation we want at the end. So we still teach that derivation. We haven’t discovered anything better, so we just keep teaching that.

Let me run through the operations quickly, to show you what is going on. There are only four operations, so it is not that difficult, really. Historically, only the last operation has caused people major headaches. Newton was called on the carpet for it, some decades after he first published his method, by a clever bishop named Berkeley. Berkeley did not like the fact that δx went to zero only on the right side. But no one could sort it out one way or the other, and within a few decades everyone just decided to move on. They accepted the final equation because it worked and swept the rest under the rug.

But what MM will show you is that the derivation is lost long before the last operation. That last operation is indeed a big cheat, but mathematicians have put so many coats of pretty paint on it that it is impossible to make them look at it clearly anymore. They answer that δx is part of a ratio on the left side, and because of that it is sort of glued to the δy above it. They say that δy/δx must be considered as one entity, and they say that this means it is somehow unaffected by taking δx to zero on the right side. That is math by wishful thinking, but what are you going to do?

To get them to stand up and take notice, MM has been forced to show them the even bigger cheats in the previous steps. Amazingly, no one in all of history has noticed these bigger cheats, not even that clever bishop. So let us go through all the steps.

In the first equation, the variables stand for either “all possible points on the curve” or “any possible point on the curve.” The equation is true for all points and any point. Let us take the latter definition, since the former does not allow us any room to play.

So, in the first equation, we are at “any point on the curve”.

In the second equation, are we still at any point on the same curve? Some will think that (y + δy) and (x + δx) are the co-ordinates of another any-point on the curve, this any-point being some distance further along the curve than the first any-point. But a closer examination will show that the second curve equation is not the same as the first. The any-point expressed by the second equation is not on the curve $y = x^2$. In fact, it must be exactly δy off that first curve. Since this is true, we must ask why we would want to subtract the first equation from the second equation. Why do we want to subtract an any-point on a curve from an any-point off that curve?

Furthermore, in going from equation 1 to equation 2, we have added different amounts to each side. This is not normally allowed. Notice that we have added δy to the left side and $2x\,\delta x + (\delta x)^2$ to the right side. This might have been justified by some argument if it gave us two any-points on the same curve, but it does not. We have completed an illegal operation for no apparent reason.

Now we subtract the first any-point from the second any-point. What do we get? Well, we should get a third any-point. What is the co-ordinate of this third any-point? It is impossible to say, since we got rid of the variable y. A co-ordinate is in the form (x,y) but we just subtracted away y. You must see that δy is not the same as y, so who knows if we are off the curve or on it. Since we subtracted a point on the first curve from a point off that curve, we would be very lucky to have landed back on the first curve. But it does not matter, since we are subtracting points from points. Subtracting points from points is illegal anyway. If you want to get a length or a differential you must subtract a length from a length or a differential from a differential. Subtracting a point from a point will only give you some sort of zero—another point. But we want δy to stand for a length or differential in the third equation, so that we can divide it by δx. As the derivation now stands, δy must be a point in the third equation.

Yes, δy is now a point. It is not a change-in-y in the sense that the calculus wants it to be. It is no longer the difference in two points on the curve. It is not a differential! Nor is it an increment or interval of any kind. It is not a length, it is a point. What can it possibly mean for an any-point to approach zero? The truth is it does not mean anything. A point cannot approach a zero length since a point is already a zero length.

Look at the second equation again. The variable y stands for a point, but the variable δy stands for a length or an interval. But if y is a point in the second equation, then δy must be a point in the third equation. This makes dividing by δx in the next step a logical and mathematical impossibility. You cannot divide a point by any quantity whatsoever, since a point is indivisible by definition. The final step, letting δx go to zero, cannot be defended whether you are taking only the denominator on the left side to zero or taking the whole fraction toward zero (which has been the claim of most). The ratio δy/δx was already compromised in the previous step. The problem is not that the denominator is zero; the problem is that the numerator is a point. The numerator is zero.

MM's Construction Methods

MM's new method drives right around this mess by dispensing with points altogether. You can see that the big problem in the current derivation is in trying to subtract one point from another. But you cannot subtract one point from another, since each point acts like a zero. Every point has zero extension in every direction. If you subtract zero from zero you can only get zero.

You will say that MM subtracted one point from another above (x-y) and got a length, but that is only because he treated each variable as a length to start with. Each “point” on a ruler or curve is actually a length from zero, or from the end of the ruler. Go to the “point” 5 on the ruler. Is that number 5 really a point? No, it is a length. The number 5 is telling you that you are five inches from the end of the ruler. The number 5 belongs to the length, not the point. Which means that the variable x, that may stand for 5 or any other number on the ruler, actually stands for a length, not a point. This is true for curves as well as straight lines or rulers. Every curve is like a curved ruler, so that all the numbers at “points” on the curve are actually lengths.

You may say, “Well, don’t current mathematicians know that? Doesn’t the calculus take that into account? Can’t you just go back into the derivation above and say that y is a length from zero instead of a point, which means that in the third equation δy is a length, which means that the derivation is saved?” Unfortunately, no. You cannot say any of those things, since none of them are true. The calculus currently holds that y’ is an instantaneous velocity, which is a velocity at a point and at an instant. You will be taught that the point y is really a point in space, with no time extension or length. Mathematicians believe that the calculus curve is made up of spatial points, and physicists of all kinds believe it, too. That is why MM's criticism is so important, and why it cannot be squirmed out of. The variable y is not a length in the first equation of the derivation, and this forces δy to be a point in the third equation.

A differential stands for a length only if the two terms in the differential are already lengths. They must both have extension. Five inches minus four inches is one inch. Everything in that sentence is a length. But the fifth-inch mark minus the fourth-inch mark is not the one-inch mark, nor is it the length one inch. A point minus a point is a meaningless operation. It is like 0 – 0.

This is the reason MM was careful to build his table only with lengths and not points: he discovered that you cannot assign numbers to points. If you cannot assign numbers to points, then you cannot assign variables or functions to points. When building his table above, MM kind of blew past this fact, since he did not want to confuse you with too much theory. MM's table is all lengths, but he did not really tell you why it had to be like that. Now you should be able to see that points cannot really enter equations or tables at all.

Only ordinal numbers can be applied to points. These are ordinal numbers: 1st, 2nd, 3rd. The fifth point, the eighth point, and so on. But math equations apply to cardinal or counting numbers, 1, 2, 3. You cannot apply a counting number to a point. As MM showed with the ruler, any time you apply a counting number to a “point” on the ruler, that number attaches to the length, not the point. The number 5 means five inches, and that is a length from zero or from the end of the ruler. It is the same with all lines and curves. And this applies to pure math as well as to applied math. Even if your lines and curves are abstract, everything MM says here still applies in full force. The only difference is that you no longer call differentials lengths; you call them intervals or differentials or something.

Some might say that one could go back and redefine all the points as lengths in the existing derivation, but you cannot. MM has shown you that Newton cheated on all four steps, not just the last one. You cannot “derive” his last equation from his first by applying a series of mathematical operations to them like this, and what is more you do not need to. MM has shown with his table that you do not need to derive the magic equation, since it drops out of the definition of exponent fully formed. The equation is axiomatic. What MM means by this is that it really is precisely like the equation 1+1=2. You do not need to derive the equation 1+1=2, or prove it. You can just pull it from a table of apples or oranges and generalize it. It is definitional. It is part of the definition of number and equality. In the same way, the magic equation is a direct definitional outcome of number, equality, and exponent. Build a simple table and the equation drops out of it without any work at all.
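One way to see an equation being read off such a table: when the powers z^n are differenced at unit steps from zero, the constant row for exponent n is always n times the constant row for exponent n - 1 (2 = 2·1, 6 = 3·2, 24 = 4·6, and so on). Labelling rows with deltas as the text does, this reads 2ΔΔz = ΔΔΔz², 3ΔΔΔz² = ΔΔΔΔz³, and so on. The sketch below checks the relation numerically. It assumes unit steps starting from zero, `constant_row` is a hypothetical helper, and whether this is exactly the chart equality MM has in mind is an assumption, since the chart is not reproduced in this section.

```python
def differences(values):
    """Subtract each entry from the next one."""
    return [b - a for a, b in zip(values, values[1:])]


def constant_row(n, count=10):
    """Difference z**n (z = 0..count) n times and return the resulting row.

    For unit steps this row is constant; assumes count >= n.
    """
    row = [z ** n for z in range(count + 1)]
    for _ in range(n):
        row = differences(row)
    return row


# Compare the constant row for exponent n with n times the one for n - 1.
for n in range(2, 7):
    top = constant_row(n)[0]
    below = constant_row(n - 1)[0]
    print(n, top, n * below, top == n * below)
```

Each line printed ends in True; the constant rows for exponents 2 through 6 are 2, 6, 24, 120 and 720.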

If you must have a derivation, the simplest possible one is this one:

We are given a functional equation of the general sort

$$y = x^n$$

and we seek y’, where, by definition,

$$y' = \Delta x^n$$

Then we go to our generalized equation from the table, which is

$$n x^{n-1} = \Delta x^n$$

By substitution, we get

$$y' = n x^{n-1}$$

That’s all we need. But MM will give you one other piece of information that will come in handy later. Remember how we cancelled all those deltas, to simplify the first equations coming out of the table? Well, we did that just to make things look tidier, and to make the equations look like the current calculus equations. But those deltas are really always there. You can cancel them if you want to clean up your math, but when you want to know what is going on physically, you have to put them back in. What they tell you is that when you are dealing with big exponents, you are dealing with very complex accelerations. Once you get past the exponent two, you aren’t dealing with lengths or velocities anymore. The variable x to the exponent 6 will have 7 deltas in front of it, as you can see by going back to the table. That is a very high degree of acceleration. Three deltas is a velocity. Four is an acceleration. Five is a variable acceleration. Six is a change of a variable acceleration. And so on. Most people cannot really visualize anything beyond a variable acceleration, but high exponent variables do exist in nature, which means that you can go on changing changes for quite a while. If you go into physics or engineering, this knowledge may be useful to you. A lot of physicists appear to have forgotten that accelerations are often variable to high degrees. They assume that every acceleration in nature is a simple acceleration.
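The claim that x to the exponent 6 carries 7 deltas can be checked by counting how many rounds of subtraction a table of powers needs before its row stops changing. This is a minimal sketch, assuming unit steps starting from zero and counting the raw values as one delta, as the text does; `deltas_until_constant` is a hypothetical helper. It only counts deltas and says nothing about which physical quantity each row represents.

```python
def deltas_until_constant(n, count=12):
    """Difference z**n (z = 0..count) until the row stops changing.

    Returns (number of deltas on that row, its constant value). The raw
    values count as one delta, as in the text; each subtraction adds one.
    """
    if not 0 <= n < count:
        raise ValueError("need 0 <= n < count")
    row = [z ** n for z in range(count + 1)]
    deltas = 1
    while len(set(row)) > 1:
        row = [b - a for a, b in zip(row, row[1:])]
        deltas += 1
    return deltas, row[0]


for n in (2, 3, 6):
    print(n, deltas_until_constant(n))
```

This prints `(3, 2)` for exponent 2, `(4, 6)` for exponent 3 and `(7, 720)` for exponent 6: the first constant row always sits one delta above the exponent.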

Introduction

In this paper MM will prove that the invention of the calculus using infinite series, and its subsequent interpretation using limits, were both errors in analyzing the given problems. In fact, as MM will show, they were both based on the same conceptual error: that of applying diminishing differentials to a mathematical curve (a curve as drawn on a graph). In this way MM will bypass and ultimately falsify both standard and nonstandard analysis.

There is no doubt that the current notation and the current method of teaching calculus are a gigantic mess. When this treatise is finally adopted by the mathematical world, educated people will look back and wonder how calculus could exist, and be taught, in such a confusing manner. They will wonder how such basic math, so easily understood, could have remained in a halfway state for so many centuries. The current notation and derivation for the equations of calculus will look to them like the leeches that doctors used to put on patients, as an all-round cure, or like the holes they drilled in the head to cure headache. Many students have felt that learning calculus is like having holes drilled in their heads, and this treatise will show that they were right to feel that way.

After reading this treatise, the reader will understand that no one understands or ever understood calculus: not Einstein, not Cauchy, not Cantor, not Russell, not Bohr, not Feynman, no one. Not even Leibniz or Newton understood it. That this has continued through the 20th century and into the 21st is due to the philosophy put forward by Heisenberg and Bohr in the early 1900s, that physics and math are not understandable in the normal way. This attitude is summed up in the slogan "Shut up and calculate," often attributed to Feynman. The Copenhagen Interpretation of quantum mechanics says that the unexplainable principles are true because they work. This applies to Relativity also, in which the paradoxes must simply be accepted, whether they make sense or not.

In the first semester of calculus, the student will learn differential calculus. The amazing thing is that the student will probably make it to the end of the semester without ever being told what a differential is. Most mathematicians learn that differential calculus is about solving certain sorts of problems using a derivative, and later courses called “differential equations” are about solving more difficult problems in the same basic way. But most never think about what a differential is, outside of calculus.

The calculus shown in this treatise is based on a constant differential instead of a diminishing differential. This may not compute at first, because students have learned that in calculus the differentials are always getting smaller.

A differential is one number subtracted from another number: (2-1) is a differential. So is (x-y). A “differential” is just a fancier term for a “difference”. A differential is written as two terms and a minus sign, but as a whole, a differential stands for one number. The differential (2-1) is obviously just 1, for example. So you can see that a differential is a useful expansion. It is one number written in a longer form. You can write any number as a differential. The number five can be written as (8-3), or in a multitude of other ways. We may want to write a single number as a differential because it allows us to define that differential as some useful physical parameter. For instance, a differential is most often a length. Say you have a ruler. Go to the 2-inch mark. Now go to the 1-inch mark. What is the difference between the two marks? It is one inch, which is a length. (2-1) may be a length. (x-y) may also be a length. In pure math, we have no lengths, of course, but in math applied to physics, a differential is very often a length.

There is basically only one equation that you learn in your first semester of calculus. All the other equations are just variations and expansions of the one equation. This one equation is also the basic equation of what you will learn next semester in integral calculus. All you have to do is turn it upside down, in a way. This equation is

| $y' = nx^{n-1}$ |

This is the magic equation. What you won't be told is that this magic equation was not invented by either Newton or Leibniz. All they did is invent two similar derivations of it. Both of them knew the equation worked, and they wanted to put a foundation under it. They wanted to understand where it came from and why it worked. But they failed and everyone else since has failed. The reason they failed is that the equation was used historically to find tangents to curves, and everyone all the way back to the ancient Greeks had tried to solve this problem by using a magnifying glass.

This means that for millennia, the accepted way to approach the problem and the math was to try to straighten out the curve at a point. If you could straighten out the curve at that point you would have the tangent at that point. The ancient Greeks had the novel idea of looking at smaller and smaller segments of the curve, closer and closer to the point in question. The smaller the segment, the less it curved. Rather than use a real curve and a real magnifying glass, the Greeks just imagined the segment shrinking down. This is where we come to the diminishing differential. The Greeks assigned that differential to the length of the segment, and then imagined it getting smaller and smaller.

Two thousand years later, nothing had changed. Newton and Leibniz were still thinking the same way. Instead of saying the segment was "getting smaller", they said it was "approaching zero". That is why we now use the little arrow and the zero. Newton even made tables, kind of like MM will make below. He made tables of diminishing differentials and was able to pull the magic equation from these tables.

The problem is that he and everyone else has used the wrong tables. You can pull the magic equation from a huge number of possible tables, and in each case the equation will be true and in each case the table will "prove" or support the equation. But in only one table will it be clear why the equation is true. Only one table will be simple enough and direct enough to show a 16-year-old where the magic equation comes from. Only one table will cause everyone to gasp and say, "Aha, now I understand." Newton and Leibniz never discovered that table, and no one since has discovered it. All their tables were too complex by far. Their tables required you to make very complex operations on the numbers or variables or functions. In fact, these operations were so complex that even Newton and Leibniz got lost in them.

Newton and Leibniz were forced to perform operations on their variables that were actually false. Getting the magic equation from a table of diminishing differentials is so complex and difficult that no one has ever been able to do it without making a hash of it. It can be done, but it isn't worth doing. If you can pull the magic equation from a simple table of integers, why try to pull it from a complex table of functions with strange and confusing scripts? Why teach calculus as a big hazy mystery, invoking infinite series or approaches to 0’s or infinitesimals, when you can teach it at a level that is no more complex than 1+1=2?

This nest of historical errors is not just a matter of semantics, metaphysics, or failed definitions or methods. It also leads to wrong solutions; see, for example, this paper on the Miles Mathis site: The Derivatives of the Natural Log and of 1/X Are Wrong.

The redefinition of the derivative will also undercut the basic assumptions of all current topologies, including symplectic topology, which depends on the traditional definition in its use of points in phase space. Likewise, linear and vector algebra and the tensor calculus will be affected foundationally by MM's re-definition, since the current mathematics will be shown to be inaccurate representations of the various spaces or fields they hope to express. All representations of vector spaces, whether they are abstract or physical, real or complex, composed of whatever combination of scalars, vectors, quaternions, or tensors, will be influenced, since it will be shown that all mathematical spaces based on Euclid, Newton, Cauchy, and the current definition of the point, line, and derivative are necessarily at least one dimension away from physical space. That is to say that the variables or functions in all current mathematics are interacting in spaces that are mathematical spaces, and these mathematical spaces (all of them) do not represent physical space.

This is not a philosophical contention. The thesis here is not that there is some metaphysical disconnection between mathematics and reality. This thesis, proved mathematically below, is that the historical and currently accepted definitions of mathematical points, lines, and derivatives are all false for the same basic reason, and that this falsifies every mathematical space. In correcting the definitions, it also corrects calculus, topology, linear and vector algebra, and the tensor calculus (among many other things). In this way the problem is solved once and for all, and there need be no talk of metaphysics, formalisms or other esoterica.

MM solves the problem by the simplest method possible, without recourse to any of the mathematical systems that are critiqued. It will not require any math beyond elementary number analysis, basic geometry and simple logic. This is done pointedly, since the fundamental nature of the problem, and its status as the oldest standing problem in mathematics, has made it clearly unresponsive to other, more abstract analysis. The problem has not only defied solution; it has defied detection. Therefore an analysis of the foundation must be done at ground level: any use of higher mathematics would be begging the question. This has the added benefit of making this paper comprehensible to any patient reader. Anyone who has ever taken calculus (even those who may have failed it) will be able to follow MM's arguments. Professional mathematicians may find this annoying for various reasons, but they are asked to be gracious. For they too may find that a different analysis at a different pace in a different “language” will yield new and useful mathematical results.

The end product of MM's proof will be a re-derivation of the core equation of the differential calculus, by a method that uses no infinite series and no limit concept. The integral will not be derived in this paper, but the new algorithm provided here makes it easy to do so, and no one will be in doubt that the entire calculus has been re-established on firmer ground.

It may also be of interest to many that the method shown here explains, in the simplest possible manner, why umbral calculus has always worked. Much formal work has been done on the umbral calculus since 1970; but, although the various equations and techniques of the umbral calculus have been connected and extended, they have never yet been fully grounded. MM's re-invention and re-interpretation of the Calculus of Finite Differences allows him to show, by lifting a single curtain, why subscripts act exactly like exponents in many situations.

Finally, and perhaps most importantly, this reinvention and re-interpretation of the Calculus of Finite Differences solves many of the point-particle problems of QED without renormalization. The equations of QED have required renormalization only because they had first been de-normalized by the current maths, all of which are based upon what can be termed the Infinite Calculus. The current interpretation of calculus allows for the calculation of instantaneous velocities and accelerations, and this is caused both by allowing functions to apply to points and by using infinite series to approach points in analyzing the curve. Returning to the Finite Calculus, and jettisoning the point from applied math, points the way to cleaning up QED. By making every variable or function a defined interval, every field and space can be redefined, dispensing with the need for most or all renormalization, including the primary raison d'être of string theory.

Newton’s calculus evolved from charts he made himself from his power series, based on the binomial expansion. The binomial expansion was an infinite series expansion of a complex differential, using a fixed method. In trying to express the curve as an infinite series, he was following the main line of reasoning in the pre-calculus algorithms, all the way back to the ancient Greeks. More recently Descartes and Wallis had attacked the two main problems of the calculus (the tangent to the curve and the area of the quadrature) in an analogous way, and Newton’s method was a direct consequence of his readings of their papers. All these mathematicians were following the example of Archimedes, who had solved many of the problems of the calculus 1900 years earlier with a similar method based on summing or exhausting infinite series. However, Archimedes never derived either of the core equations of the calculus proper, the main one (and the subject of this paper) being $y' = nx^{n-1}$.

This equation was derived by Leibniz and Newton almost simultaneously, if we are to believe their own accounts. Their methods, though slightly different in form, were nearly equivalent in theory, both being based on infinite series and differentials that approached zero. Leibniz tells us himself that the solution to the calculus dawned upon him while studying Pascal’s differential triangle. To solve the problem of the tangent this triangle must be made smaller and smaller.

Both Newton and Leibniz knew the answer to the problem of the tangent before they started, since the problem had been solved long before by Archimedes using the parallelogram of velocities. From this parallelogram came the idea of instantaneous velocity, and the 17th century mathematicians, especially Torricelli and Roberval, certainly took their belief in the instantaneous velocity from the Greeks. The Greeks, starting with the Peripatetics, had assumed that a point on a curve might act like a point in space. It could therefore be imagined to have a velocity. When the calculus was used almost two millennia later by Newton to find an instantaneous velocity—by assigning the derivative to it—he was simply following the example of the Greeks.

However, the Greeks had seemed to understand that their analytical devices were inferior to their synthetic methods, and they were even believed by many later mathematicians (like Wallis and Torricelli) to have concealed these devices. Whether or not this is true, it is certain that the Greeks never systematized any methods based on infinite series, infinitesimals, or limits. As this paper proves, they were right not to. The assumption that the point on the curve may be treated as a point in space is not correct, and the application of any infinite series to a curve is thereby an impossibility. Properly derived and analyzed, the derivative equation cannot yield an instantaneous velocity, since the curve always presupposes a subinterval that cannot approach zero; a subinterval that is, ultimately, always one.

Part One - The Groundwork

The groundwork analyzes at some length a number of simple concepts that have not received much attention in mathematical circles in recent years. Some of these concepts have not been discussed for centuries, perhaps because they are no longer considered sufficiently abstract or esoteric. One of these concepts is the cardinal number. Another is the cardinal (or natural) number line. A third is the assignment of variables to a curve. A fourth is the act of drawing a curve, and assigning an equation to it. Were these concepts still taught in school, they would be taught very early on, since they are quite elementary. As it is, they have mostly been taken for granted—one might say they have not been deemed worthy of serious consideration since the fall of Athens. Perhaps even then they were not taken seriously, since the Greeks also failed to understand the curve—as their use of an instantaneous velocity makes clear.

The most elementary concept that needs to be analyzed is the point. This is done in the separate paper "A Physical Point has No Dimensions".

Part Two - Historical Interlude

Let us take a short break from this groundwork and return to the history of the calculus for a moment. Two mathematicians in history came nearest to recognizing the difference between the mathematical point and the physical point. You may think that Descartes must be one, since he invented the graph. But he is not. Although he did much important work in the field, his graph turned out to be the greatest obstruction in history to a true understanding of the problem MM has related here. Had he seen the operational significance of all diagrams, he would have discovered something truly basic. But he never analyzed the fields created by diagrams, his or any others. No, the first to flirt with the solution was Simon Stevin, the great Flemish mathematician of the late 16th century. He is the person most responsible for the modern definition of number, having boldly redefined the Greek definitions that had come to the “modern” age via Diophantus and Vieta (General Physics, Douglas C. Giancoli, 1984). He showed the error in assigning the point to the “unit” or the number one; the point must be assigned to its analogous magnitude, which is zero. He proved that the point was indivisible precisely because it was zero. This correction to both geometry and arithmetic pointed Stevin in the direction of MM’s solution here, but he never realized the operational import of the diagram in geometry. In refining the concepts of number and point, he did not see that both the Greeks and the moderns were in possession of two separate concepts of the point: the point in space and the point in diagrammatica.

John Wallis came even nearer this recognition. Following Stevin, he wrote extensively of the importance of the point as analogue to the nought. He also did very important work on the calculus, being perhaps the greatest influence on Newton. He was therefore in the best position historically to have discovered the disjunction of the two concepts of point. Unfortunately, he continued to follow the strong current of the 17th century, which was dominated by the infinite series and the infinitesimal. After Newton created the current form of the calculus, mathematicians were no longer interested in the rigorous definitions of the Greeks. The increasing abstraction of mathematics made the ontological niceties of the ancients seem quaint, if not passé. The mathematical current since the 18th century has been strongly progressive. Many new fields have arisen, and studying foundations has not been in vogue. It therefore became less and less likely that anyone would notice the conceptual errors at the roots of the calculus. Mathematical outsiders like Bishop Berkeley in the early 18th century failed to find the basic errors (he found the effects but not the causes), and the successes of the new mathematics made further argument unpopular.

MM has so far critiqued the ability of the calculus to find instantaneous values. But we must remember that Newton invented it for that very purpose. In De Methodis, he proposes two problems to be solved. 1) “Given a length of the space continuously, to find the speed of motion at any time.” 2) “Given the speed of motion continuously, to find the length of space described at any time.” Obviously, the first is solved by what we now call differentiation and the second by integration. Over the last 350 years, the foundation of the calculus has evolved somewhat, but the questions it proposes to solve and the solutions have not. That is, we still think that these two questions make sense, and that it is sensible that we have found an answer to them.

Question 1 concerns finding an instantaneous velocity, which is a velocity over a zero time interval. This is done all the time, up to this day. Question 2 is the mathematical inverse of question 1. Given the velocity, find the distance traveled over a zero time interval. This is no longer done, since the absurdity of it is clear. On the graph, or even in real life, a zero time interval is equal to a zero distance. There can be no distance traveled over a zero time interval, even less over a zero distance, and most people seem to understand this. Rather than take this as a problem, though, mathematicians and physicists have buried it. It is not even paraded about as a glorious paradox, like the paradoxes of Einstein. No, it is left in the closet, if it is remembered to exist at all.

As should already be clear from MM’s exposition of the curve equation, Newton’s two problems are not in proper mathematical or logical form, and are thereby insoluble. This implies that any method that provides a solution must also be in improper form. If you find a method for deriving a number that does not exist, then your method is faulty. A method that yields an instantaneous velocity must be a suspect method. An equation derived from this method cannot be trusted until it is given a logical foundation. There is no distance over a zero distance; and, equally, there is no velocity over a zero interval.

Bishop Berkeley commented on the illogical qualities of Newton’s proofs soon after they were published (The Analyst, 1734). Ironically, Berkeley’s critiques of Newton mirrored Newton’s own critiques of Leibniz’s method. Newton said of Leibniz, “We have no idea of infinitely little quantities & therefore I introduced fluxions into my method that it might proceed by finite quantities as much as possible.” And, “The summing up of indivisibles to compose an area or solid was never yet admitted into Geometry.” (Newton, Isaac, Mathematical Papers, 8: 597.)

This “using finite quantities as much as possible” is very nearly an admission of failure. Berkeley called Newton’s fluxions “ghosts of departed quantities” that were sometimes tiny increments, sometimes zeros. He complained that Newton’s method proceeded by a compensation of errors, and he was far from alone in this analysis. Many mathematicians of the time took Berkeley’s criticisms seriously. Later mathematicians who were much less vehement in their criticism, including Euler, Lagrange and Carnot, made use of the idea of a compensation of errors in attempting to correct the foundation of the calculus. So it would be unfair to dismiss Berkeley simply because he has ended up on the wrong side of history. However, Berkeley could not explain why the derived equation worked, and the usefulness of the equation ultimately outweighed any qualms that philosophers might have. Had Berkeley been able to derive the equation by clearly more logical means, his comments would undoubtedly have been treated with more respect by history. As it is, we have reached a time when quoting philosophers, and especially philosophers who were also bishops, is far from being a convincing method, and MM will not do more of it. Physicists and mathematicians weaned on the witticisms of Richard Feynman are unlikely to find Berkeley’s witticisms quite up-to-date.

MM takes this opportunity to point out, however, that his critique of Newton is of a categorically different kind than that of Berkeley, and of all philosophers who have complained of infinities in derivations. MM has not so far critiqued the calculus on philosophical grounds, nor will he. The infinite series has its place in mathematics, as does the limit. MM’s argument is not that one cannot conceive of infinities, infinitesimals, or the like. His argument has been and will continue to be that the curve, whether it is a physical concept or a mathematical abstraction, cannot logically admit of the application of an infinite series, in the way of the calculus. In glossing the modern reaction to Berkeley’s views, Carl Boyer said, “Since mathematics deals with relations rather than with physical existence, its criterion of truth is inner consistency rather than plausibility in the light of sense perception or intuition.” (Boyer, Carl B., The History of the Calculus and its Conceptual Development, p. 227) MM agrees, and stresses that his main point, already advanced, is that there is no inner consistency in letting a differential [f(x + i) – f(x)] approach a point when that point is already expressed by two differentials [(x-0) and (y-0)].

Boyer gives the opinion of the mathematical majority when he defends the instantaneous velocity in this way: “[Berkeley’s] argument is of course absolutely valid as showing that the instantaneous velocity has no physical reality, but this is no reason why, if properly defined or taken as an undefined notion, it should not be admitted as a mathematical abstraction.” MM’s answer to this is that physics has treated the instantaneous velocity as a physical reality ever since Newton did so. Beyond that, it has been accepted by mathematicians as an undefined notion, not as a properly defined notion, as Boyer seems to admit. He would not have needed to include the proviso “or taken as an undefined notion” if all notions were required to be properly defined before they were accepted as “mathematical abstractions.” The notion of instantaneous velocity cannot be properly defined mathematically, since it is derived from an equation that cannot be properly defined mathematically. Unless Boyer wants to argue that all heuristics should be accepted as good mathematics (a position contemporary physics has accepted, and contemporary mathematics is closing in on), his argument is a non-starter.

Many mathematicians and physicists will maintain that the foundation of the calculus has been a closed question since Cauchy in the 1820s, and that MM’s entire thesis can therefore only appear quixotic. However, as recently as the 1960s Abraham Robinson was still trying to solve perceived problems in the foundation of the calculus. His nonstandard analysis was invented for just this purpose, and it generated quite a bit of attention in the world of math. The mathematical majority has not accepted it, but its existence is proof of widespread unease. Even at the highest levels (one might say especially at the highest levels) there continue to be unanswered questions about the calculus. MM’s thesis answers these questions by showing the flaws underlying both standard and nonstandard analysis.

Newton’s original problems should have been stated like this: 1) Given a distance that varies over any number of equal intervals, find the velocity over any proposed interval. 2) Given a variable velocity over an interval, find the distance traveled over any proposed subinterval. These are the questions that the calculus really solves, as MM will prove below. The numbers generated by the calculus apply to subintervals, not to instants or points. Newton’s use of infinite series, like the power series, misled him into believing that curves drawn on graphs could be expressed as infinite series of (vanishing) differentials. All the other founders of the calculus made the same mistake. But, due to the way that the curve is generated, it cannot be so expressed. Each point on the graph already stands for a pair of differentials; therefore it is both pointless and meaningless to let a proposed differential approach a point on the graph.
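Read as operations on equally spaced data, the two restated problems can be sketched in a few lines of Python. This is an illustrative sketch only, not MM’s own derivation; the function names and the sample data (distance s = t² recorded at whole-number times) are hypothetical. The first function answers problem 1 (velocity over each interval), the second answers problem 2 (distance over a chosen run of subintervals).

```python
def interval_velocities(times, distances):
    """Problem 1: average velocity over each interval between consecutive samples."""
    return [
        (distances[i + 1] - distances[i]) / (times[i + 1] - times[i])
        for i in range(len(times) - 1)
    ]


def distance_over(times, velocities, start, stop):
    """Problem 2: distance covered from sample index `start` to `stop` (stop > start),
    given the velocity on each interval."""
    return sum(
        velocities[i] * (times[i + 1] - times[i]) for i in range(start, stop)
    )


# Hypothetical data: s = t**2 sampled at t = 0, 1, 2, 3, 4, 5.
times = [0, 1, 2, 3, 4, 5]
distances = [t * t for t in times]

v = interval_velocities(times, distances)
print(v)                               # [1.0, 3.0, 5.0, 7.0, 9.0]
print(distance_over(times, v, 1, 4))   # 15.0
```

Summing the interval velocities from t = 1 to t = 4 recovers the distance difference exactly (16 − 1 = 15). Neither function ever refers to an instant; both work only with the sampled intervals.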

Proof that the Current Derivative for Powers is False

This section will show that the proof of $y' = nx^{n-1}$ is false. [To be clear, this is not to say the equation is false, only the proof.] The proof is not only unnecessary but false. Miles Mathis will re-prove the equation by a simpler and more transparent method.
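For reference, the conventional argument that this section disputes is usually written as follows: expand (x + h)ⁿ with the binomial theorem, divide by h, and let h go to zero. This is the standard textbook form, reproduced here only so the reader can see which proof is meant; it is not MM’s re-proof.

```latex
\frac{(x+h)^n - x^n}{h}
  = \frac{1}{h}\sum_{k=1}^{n}\binom{n}{k}x^{n-k}h^{k}
  = nx^{n-1} + \binom{n}{2}x^{n-2}h + \cdots + h^{n-1},
\qquad
y' = \lim_{h \to 0}\frac{(x+h)^n - x^n}{h} = nx^{n-1}.
```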

There are many problems that can be solved by limits and infinities (see the Zeno's Paradox paper on MM's site), but Miles Mathis does not believe that the calculus is one of them. The calculus can be solved by simple number relations, because that is what creates the equalities. As it turns out, proving the calculus with limits is not only unnecessary and inefficient, it is false. It breaks rules and finds fake numbers. It also warps fields and allows for particles and motions that cannot exist. The problems embedded in the calculus are what have caused many of the physical problems in the past century.

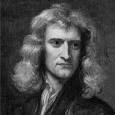

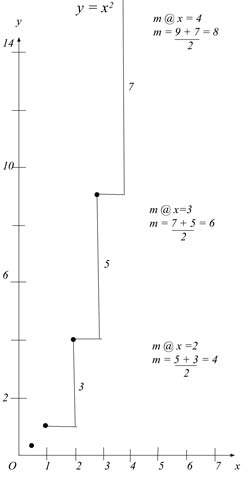

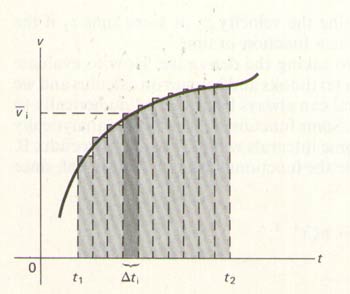

Currently, mathematicians use the calculus to find a derivative and the slope of the tangent by taking Δx to zero. Instead of straightening the curve into a line (the tangent), however, they will have changed the curve once they go below 1 for the change in the independent variable. This is important, because unless you also monitor that change, you will get the wrong answer for your curve at x. What is meant by straight will be shown from the tables for x² and x³.
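The dependence on the size of Δx can be checked numerically. The sketch below (Python; the helper names are hypothetical) computes the secant slope [f(x + Δx) − f(x)]/Δx for f(x) = x² at x = 2, using the three interval sizes that appear in the tables of this section. Algebraically the quotient is 2x + Δx, so it shifts with every change of interval; how that shift should be interpreted is the subject of MM’s argument, and the code takes no side on it.

```python
def difference_quotient(f, x, dx):
    """Secant slope over the interval [x, x + dx]: (f(x + dx) - f(x)) / dx."""
    return (f(x + dx) - f(x)) / dx


def square(x):
    return x * x


# The interval sizes used in the tables of this section.
for dx in (1, 0.5, 0.25):
    # For f(x) = x**2 this quotient is exactly 2*x + dx.
    print(dx, difference_quotient(square, 2, dx))
```

Run as written, it prints the slopes 5.0, 4.5 and 4.25, that is, 2·2 + Δx for each interval.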

Let Δx = 1 (x = 1, 2, 3, 4, ...)

| Sequence | Values |
|---|---|
| x² | 1, 4, 9, 16, 25, 36, 49, 64, 81 |
| x³ | 1, 8, 27, 64, 125, 216, 343 |
| Δx² | 3, 5, 7, 9, 11, 13, 15, 17, 19 |
| Δx³ | 7, 19, 37, 61, 91, 127 |
| ΔΔx² | 2, 2, 2, 2, 2, 2, 2, 2, 2, 2 |
| ΔΔx³ | 6, 12, 18, 24, 30, 36, 42 |
| ΔΔΔx³ | 6, 6, 6, 6, 6, 6, 6, 6 |
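The rows of this table are successive forward differences, and they can be regenerated mechanically. The Python sketch below (the `differences` helper is a hypothetical name) starts at x = 1 and reproduces the Δx², Δx³, ΔΔx² and ΔΔΔx³ rows. Started at x = 1, the second differences of x³ begin at 12; the leading 6 in the ΔΔx³ row corresponds to extending the cubes back to x = 0. The constant rows, 2 for x² and 6 for x³, equal 2! and 3!, which is the standard finite-difference result for xⁿ with a step of 1.

```python
def differences(values):
    """Forward differences: each entry minus the one before it."""
    return [b - a for a, b in zip(values, values[1:])]


xs = range(1, 11)                  # x = 1, 2, ..., 10
squares = [x ** 2 for x in xs]
cubes = [x ** 3 for x in xs]

d_sq = differences(squares)        # 3, 5, 7, ...
dd_sq = differences(d_sq)          # constant 2
d_cu = differences(cubes)          # 7, 19, 37, ...
dd_cu = differences(d_cu)          # 12, 18, 24, ...
ddd_cu = differences(dd_cu)        # constant 6

print(d_sq)
print(dd_sq)
print(d_cu)
print(dd_cu)
print(ddd_cu)
```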

Let Δx = .5 (x = .5, 1, 1.5, 2, ...)

| Sequence | Values |
|---|---|
| x² | .25, 1, 2.25, 4, 6.25, 9 |
| x³ | .125, 1, 3.375, 8, 15.63 |
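Repeating the difference computation with Δx = .5 shows how the constant rows change with the interval. In this Python sketch (names hypothetical) the second differences of x² come out as 0.5 and the third differences of x³ as 0.75, which are 2·Δx² and 6·Δx³. The pattern n!·Δxⁿ for the n-th differences of xⁿ is a standard finite-difference result, not something stated in the original text. (The table’s 15.63 is 2.5³ = 15.625 rounded.)

```python
def differences(values):
    """Forward differences between consecutive entries."""
    return [b - a for a, b in zip(values, values[1:])]


dx = 0.5
xs = [dx * k for k in range(1, 7)]    # 0.5, 1.0, 1.5, ..., 3.0
squares = [x ** 2 for x in xs]        # 0.25, 1.0, 2.25, 4.0, 6.25, 9.0
cubes = [x ** 3 for x in xs]          # 0.125, 1.0, 3.375, 8.0, 15.625, 27.0

second_sq = differences(differences(squares))              # all 0.5
third_cu = differences(differences(differences(cubes)))    # all 0.75

# Compare with the step-size pattern n! * dx**n (2 * dx**2 and 6 * dx**3).
print(second_sq, 2 * dx ** 2)
print(third_cu, 6 * dx ** 3)
```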

Let Δx = .25 (x = .25, .5, .75, 1, ...)

x²